- Products and Solutions

- Services

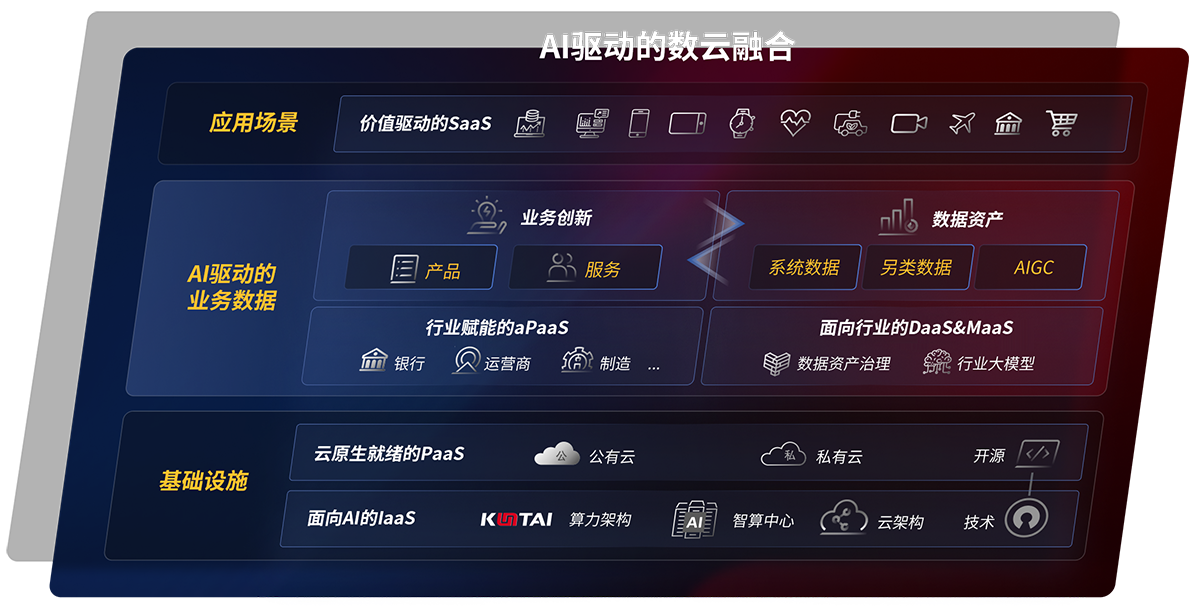

Cloud-Native Products and Application Platforms

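- 超凡国际研云: an integrated industrial internet solution for R&D and manufacturing

- 磐云: a cloud-native PaaS platform

- 超凡国际云印: a digital print management platform

- 超凡国际视讯: a converged conferencing service platform and applications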

Cloud Services

Beijing Public Network Security Filing No. 11010802037792 (京公网安备 11010802037792号)
